Over the last few years, researchers across fields have uncovered something that’s simultaneously humbling, frustrating, and scary: most research doesn’t hold up in subsequent analysis.

It’s called the replication crisis, and it’s an issue that has challenged psychology, engulfed economics, and been identified as a disease in a field full of them (medicine).

One area where replication has not been widely discussed is sports analytics, which, while more limited in scope than the disciplines listed above, takes center stage at this weekend’s Sloan Sports Analytics Conference (SSAC), with more than 3,000 practitioners, fans, and professional staffers gathering in Boston.

One of the more attractive aspects of SSAC is its research paper contest, which generally features outstanding papers, provides researchers widespread press for their work, and awards a top prize of $20,000 to one submission. So it was with optimism that I read that in 2017, SSAC would be doing its best to ensure the validity of its contest submissions.* Via the rules page, “research will be evaluated on but not necessarily limited to the following: novelty of research, academic rigor, and reproducibility.”

Specifically, for reproducibility, the conference asks: “Can the model and results be replicated independently?”

This is an important definition, and one that mimics the work of Prasad Patil, Roger Peng, and Jeff Leek, who recently went to extensive lengths to precisely define both reproducibility and replicability. Patil et al. argue that research is reproducible if a different analyst can generate the same results using the same code and data, and that research is replicable if a different analyst can obtain consistent estimates when recollecting the data and re-doing the analysis plan. In other words, look for data and code, and ideally you’ll see both.
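
For the programmers in the audience, here is a rough sketch of that "look for data and code" check in Python. Everything in it is my own assumption for illustration: the `Paper` class, its field names, and the three placeholder papers are hypothetical and do not describe any actual SSAC submission, and the rule is a simplified reading of the reproducibility definition above rather than anything Patil et al. wrote in code.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    # Hypothetical record: one row of a data/code checklist.
    title: str
    data_public: bool   # can an outsider get the same data?
    code_shared: bool   # did the authors share their code?


def is_reproducible(paper: Paper) -> bool:
    # Simplified rule: a different analyst needs BOTH the same data
    # and the same code to regenerate the same results.
    return paper.data_public and paper.code_shared


# Placeholder papers, invented purely to exercise the rule.
papers = [
    Paper("hypothetical paper A", data_public=True, code_shared=True),
    Paper("hypothetical paper B", data_public=True, code_shared=False),
    Paper("hypothetical paper C", data_public=False, code_shared=False),
]

reproducible = [p.title for p in papers if is_reproducible(p)]
print(reproducible)
```

Under this rule, public data without code (or code without data) is not enough; only a paper that provides both passes.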

So, how did the 2017 finalists fare by these definitions?

Not great.

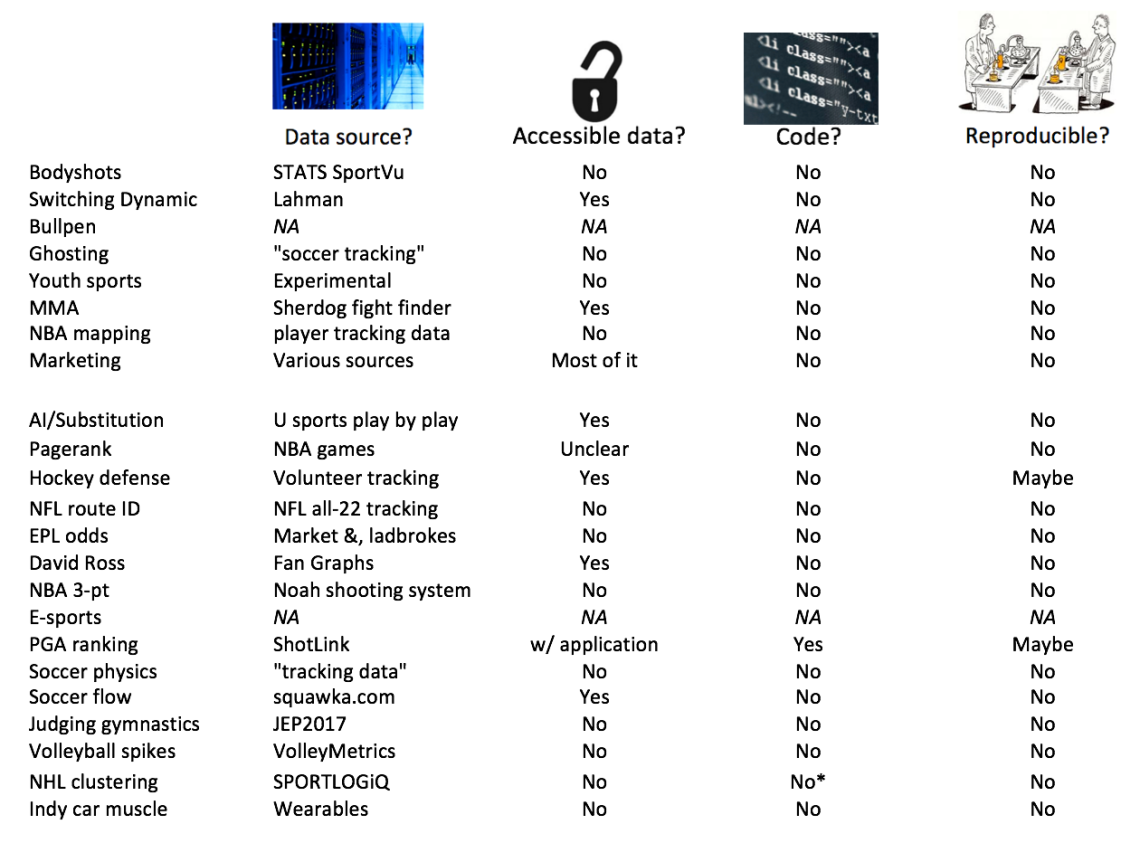

Here’s a chart summarizing the 2017 contest.** Each paper is identified by keywords from its title, and the columns reflect the data source, whether or not the data is (or appears to be) publicly available, if code was provided, and whether or not the overall paper is, by definition, reproducible. Note that two papers are yet to be posted on the SSAC site.

Summary of the 2017 Sloan research papers, including data source, if the data is publicly accessible, if code is provided, and if the paper meets the definition of reproducible

Of this year’s 21 listed finalists, fewer than half cite publicly available data that could be used by outsiders, as most submissions use proprietary data or do not give sufficient detail about how the data was gathered. Even among those using public data, however, only two (the Lahman database in an MLB paper, and a Google Doc from an NHL paper) are accessible without writing one’s own computer program (note that the scrapers to obtain the data were also not shared) or doing extensive searching. At best, five or six papers boast any chance of being replicable, which, sadly, is only a few more than the number of papers that don’t share any information about where their data came from.

As for code, only Adam Levin, writer of a PGA Tour paper, shared some (link here). Adam also deserves credit because his data is available from ShotLink with an application. In fact, that application is as close as we get in the SSAC contest to reproducibility. With publicly shared passing project data, Ryan and Matt’s NHL paper would appear to be the next closest. Additionally, a separate NHL paper made reference to code, but none was shown on the author’s website.

There are several consequences to the lack of openness. First, it increases the chances of mistakes. While most of these errors have likely been innocuous, there’s no way of knowing what’s real and what’s bullshit at Sloan, which means that the latter is sometimes rewarded. As one example, a 2015 presentation showed an impossible-to-be-true chart about profiting on baseball betting, capped with a question-and-answer session in which the speaker handed out free T-shirts.*** Next, it stunts growth of the field, which is a shame because, as Kyle Wagner wrote, sports analytics has been stuck in the fog for a few years running. Finally, while citations aren’t the end goal for many SSAC paper writers, the lack of reproducible research means lower chances of papers being referenced in the future.

SSAC likes to point out that it’s a pioneer in its domain. Given that the growth of sports analytics is in the best interest of everyone in attendance, I’d recommend that the conference either start enforcing one of the criteria it claims to look for, or drop the pretense that it cares about properly advancing and vetting research.

* In full disclosure, I’ll note that I was part of a paper with Greg & Ben (code and details here) that was rejected from the 2017 contest.

** If I’ve made a mistake in the table, please let me know and I’ll update it. There may be links or explainers that I missed.

*** If you were making money by betting on sports, the last thing you’d do is get up on stage at a famous conference and tell anyone about it.

Note: Thanks to Gregory Matthews for his help with this post.

I understand the hesitancy to make code available, but if an author doesn’t at least describe the (unique details of) algorithms used in enough detail to allow someone else to reproduce the results, then I essentially discount the paper. Although so many sports analysis papers are based on proprietary/commercial data sets that it’s almost irrelevant anyway.

Totally agree. Perhaps I should’ve added another column to identify if the method was clearly described. That’s not always the case.

Sorry that you would rather people sit on interesting findings using proprietary techniques and/or data. Somehow, the rest of us don’t view it as a “crisis”.

It’s simple demand. If you limited the conf. to papers that were entirely reproducible, you’d have mind-numbingly boring papers and ~zero interest/attendance.

Concerns about misuse or diversion of proprietary data or algorithms shared for reproduction could be overcome with non-disclosure contracts, no?