(for Part II, click here)

The National Hockey League has once again made headlines for tweaking its standards of playoff qualification, this time deciding it would look into modifying the league’s shootout system. In the current structure, a shootout concludes any 5-minute overtime session ending without a goal, with the winning team earning an extra point towards season standings.

One of the major factors in the NHL’s thinking, as it turns out, is that shootouts are too random. Just today, a Toronto columnist called the shootout a coin flip.

Statisticians, including ones I have a lot of respect for, have frequently reinforced this idea. In an old post on SB Nation, St. Lawrence associate professor Michael Schuckers walks through several analyses to show that the distribution of player and team performance, by and large, is no different than what we would see due to chance. I really liked Schuckers’ article, and hopefully this post builds on his work to present another perspective.

Before exploring some data, let’s get a couple of important things out of the way.

First, I hate shootouts. They are the equivalent of ending an NBA game in a game of HORSE. Deciding a game by skills competitions is silly.

Second, I hate the NHL’s point system. More specifically, I hate how shootouts have a large role in dictating playoff positioning within the point system. Roughly 15% of NHL games are won on a shootout, and those extra points play critical roles in which teams qualify for the playoffs.

So while I agree the NHL’s system needs an overhaul, let’s stop calling shootouts random.

Here’s why.

1) Only a team’s best players take its shootout attempts.

In a shootout, coaches have their choice of players to take each shot. Shootouts can be decided in as few as three attempts per team, and it is rare for shootouts to last longer than 5 or 6 rounds. Unlike the Olympics, where the USA could keep trotting out TJ Oshie against Russia, NHL coaches are not allowed to use their players more than once.

But which players do the coaches use?

With near uniformity, they choose their forwards. In fact, of active players, only one of the top 70 in shootout attempts is a defenseman (Kris Letang ranks 42nd). In other words, coaches do not randomly pick players to take shootout attempts, and they are well aware that if they did, they’d probably lose much more often. Operating under the assumption that forwards are better at shootouts than defensemen, we immediately recognize that something is inherently not random about shootouts.

Further, if we agree that forwards are better than defensemen, is it not feasible that certain forwards are better than others? While these within-forward differences might not be large, it is certainly plausible that they exist. Moreover, part of the reason it might be difficult to distinguish good shootout forwards from bad ones is that bad ones stay on the bench!

Further, even after ten years of the league using a shootout, we might not have enough data to detect offensive player or team-specific shootout effects.

The same can’t be said of goalies.

2) Goalie performance is not random.

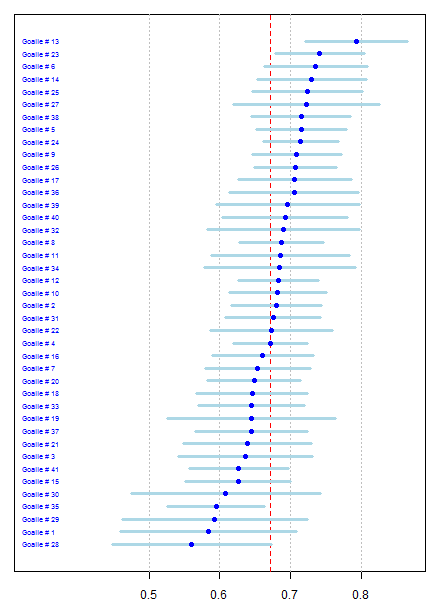

I extracted all shootout data for active goalies from NHL.com, restricting myself to the 41 goalies who have faced at least 50 shots in their careers. The average goalie in the NHL during this time period stopped 67.2% of shootout attempts.

Here’s a plot of the 41 goalies in my study, along with a confidence interval for shootout save percentage.

While Nicklas Backstrom (save percentage, 56%) lies well below the league average, Henrik Lundqvist (75%) and Marc-Andre Fleury (78%) perform notably better.

Of course, these fluctuations could simply be due to chance. With 41 goalies, we’d expect a few to have save percentage confidence intervals falling outside the league average.
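As a rough back-of-the-envelope check on "a few", here is a minimal sketch. It assumes the intervals are 95% intervals (the level is not stated above), that they have their nominal coverage, and that goalies are independent.

```python
n_goalies = 41   # goalies in the sample
alpha = 0.05     # assumption: 95% intervals; the level is not stated in the post

# Expected number of intervals that miss the league average purely by chance
expected_misses = n_goalies * alpha

# Probability that at least one interval misses, assuming independent goalies
prob_at_least_one = 1 - (1 - alpha) ** n_goalies

print(f"Expected intervals missing the league average: {expected_misses:.2f}")
print(f"Chance that at least one misses: {prob_at_least_one:.2f}")
```

Under those assumptions, a couple of "significant" goalies would be unremarkable, which is why the individual intervals alone can't settle the question.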

Thus, our critical question is: Does the observed distribution of goalie save percentages deviate from what would have occurred if every goalie’s true save percentage were 67.2%?

To answer this question, I used the total number of each goalie’s shootout save attempts (minimum of 50, and Lundqvist had the most with 150) and the Binomial distribution to simulate the number of shootout saves that each goalie would have made with an NHL-average shootout save percentage.
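A single simulated draw of this kind might look like the sketch below. The per-goalie attempt counts are hypothetical (only the minimum of 50 and maximum of 150 are given here), so the numbers will not match the real data; the variable names are mine.

```python
import numpy as np

rng = np.random.default_rng(0)

LEAGUE_SAVE_PCT = 0.672   # league-average shootout save percentage
N_GOALIES = 41

# Hypothetical attempt counts: placeholders drawn between the stated min and max.
attempts = rng.integers(50, 151, size=N_GOALIES)

# One simulated career per goalie, with every goalie exactly league average.
saves = rng.binomial(attempts, LEAGUE_SAVE_PCT)
sim_save_pct = saves / attempts

print(np.round(np.sort(sim_save_pct), 3))
```

Even with identical true talent, the simulated save percentages spread out noticeably, simply because 50 to 150 attempts is not many.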

Here’s an example of the same graph as above, only with randomly distributed save percentages (and random names, since every goalie is equal in my simulation).

Of course, one simulation by itself tells us next to nothing.

So I did this same thing 10,000 times, and at each simulation, I extracted each player’s critical value, i.e., the number of standard deviations his simulated save percentage lies away from the mean. A critical value of -1.00, for example, would indicate that the goalie’s simulated save percentage was about 5.5 percentage points below the league average.

These simulations represent what would have occurred if these goalies had been observed 10,000 times at the league average save percentage. At each run, I sorted the critical values, and averaged across simulations to get a sense of how much deviation from 67.2% we would expect to see by chance.
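The sort-and-average step can be sketched as follows. This is an illustration rather than the exact code behind the figures: the attempt counts are hypothetical placeholders, and the critical value is assumed to be the usual binomial z-score, (simulated save % − 0.672) / sqrt(0.672 × 0.328 / attempts).

```python
import numpy as np

rng = np.random.default_rng(1)

P0 = 0.672        # league-average save percentage
N_SIMS = 10_000

# Hypothetical attempt counts (the real per-goalie counts are not listed here).
attempts = rng.integers(50, 151, size=41)

# Each row is one simulated set of 41 league-average goalies.
saves = rng.binomial(attempts, P0, size=(N_SIMS, attempts.size))

# Assumed definition of the critical value: a binomial z-score per goalie.
std_err = np.sqrt(P0 * (1 - P0) / attempts)
z = (saves / attempts - P0) / std_err

# Sort within each simulation, then average each rank across simulations.
expected_order_stats = np.sort(z, axis=1).mean(axis=0)

print("Expected worst critical value:", round(expected_order_stats[0], 2))
print("Expected best critical value: ", round(expected_order_stats[-1], 2))
```

The resulting vector is what chance alone would predict for the worst goalie, the second worst, and so on up to the best.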

Here’s a plot of the observed test critical values (x-axis) versus the simulated critical values (y-axis), along with a line (y = x) which represents what would have occurred if the observed distribution equaled the simulated distribution.

In the figure above, it is clear that there are goalies who are better and worse than we would expect to have seen by chance. The observed test statistics deviate from the quantiles which we would have expected if every goalie was truly equal. In other words, it is rare to have the league’s worst goalie be as bad at shootouts as Backstrom has been, and to have the league’s best goalies be as talented as Fleury and Lundqvist.

To get a sense of just how much the NHL goalies deviate from their average, I summed the absolute value of the test statistics at each simulated draw (let’s call this value SumZ). High SumZ will indicate simulations in which several critical values fell far away from 0, while low SumZ will indicate one where most critical values fell close to 0.

Here’s a histogram of the simulated SumZ values, along with a point representing the observed SumZ of 46.9.

In only 11 of the 10,000 simulations (about 0.1%) did the simulated goalies vary as much as the real NHL goalies have. This extremely low probability provides strong evidence that differences in goalie performance are not due to chance alone.
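For anyone who wants to try the SumZ comparison themselves, here is a minimal sketch. The observed value of 46.9 is taken from above; the attempt counts are hypothetical placeholders and the z-score definition is an assumption, so the simulated tail fraction will not reproduce 11 in 10,000 exactly.

```python
import numpy as np

rng = np.random.default_rng(2)

P0 = 0.672
N_SIMS = 10_000
OBSERVED_SUM_Z = 46.9   # observed SumZ reported in the post

# Hypothetical attempt counts; swap in the real ones to replicate the analysis.
attempts = rng.integers(50, 151, size=41)

saves = rng.binomial(attempts, P0, size=(N_SIMS, attempts.size))
z = (saves / attempts - P0) / np.sqrt(P0 * (1 - P0) / attempts)

# SumZ for each simulated league: sum of absolute critical values.
sum_z = np.abs(z).sum(axis=1)

# Fraction of all-average simulations at least as spread out as reality.
p_value = (sum_z >= OBSERVED_SUM_Z).mean()

print("Mean simulated SumZ:", round(sum_z.mean(), 1))
print("Share of simulations with SumZ >= 46.9:", p_value)
```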

Of course, if goalie performance isn’t random, then shootouts aren’t, either.

To conclude, while certain aspects of a shootout may well look random (specifically, offensive performance might be highly variable from year to year, and the players we expect to be great at shootouts might be terrible at them), this post provides two examples of shootout aspects which do not appear to be due to chance.

For what it’s worth, I looked at the win percentages of the goalies in our sample. Fleury and Lundqvist, our top two netminders, have combined to win 91 of 142 of their games (win percentage, 64%), while Backstrom, our worst goalie as judged by save percentage, has won just 22 of 55 (40%).

Using these numbers, and assuming a team goes to the league average 13 shootouts in a season, the difference between a top goalie and a poor goalie is about 3 or 4 points alone, simply due to their shootout performances.
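The arithmetic behind that estimate, as a quick sketch (it assumes each shootout win is worth exactly one extra standings point, and treats the observed win percentages as given):

```python
shootouts_per_season = 13    # league-average shootouts per team, per the post

top_win_pct = 91 / 142       # Fleury and Lundqvist combined
poor_win_pct = 22 / 55       # Backstrom

# Each shootout win is worth one extra point in the standings.
extra_points = shootouts_per_season * (top_win_pct - poor_win_pct)

print(f"Top goalies' win pct:  {top_win_pct:.3f}")
print(f"Poor goalie's win pct: {poor_win_pct:.3f}")
print(f"Extra points per season: {extra_points:.1f}")
```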

Interesting. But what’s the chance a better goalie will outperform a lesser goalie in a shootout? Say a +1 SD goalie vs a -1 SD goalie? Seems like it would be very close to 50/50. Say it’s 52%. That means a shootout is 50 parts luck compared to 2 parts skill, or at least that’s one way to look at it. I’d be curious to know what the actual number might be from your simulations. But if it’s just barely above 50%, I’d say it’s fair to call shootout outcomes random. Of course, any game in its entirety is significantly random, so there’s that.

I’d suggest directly computing the expected binomial variance and then subtracting it from the observed variance. That’s the maximum variance that could be attributed to skill. You could compare the ratio of var(skill)/var(binomial) of shootout game outcomes to non-shootout game outcomes to get a solid idea of just how much more random shootouts are than a typical game.

Good questions, Ted. I’ll look at the effect of goalie performance today.

Who was arguing that all goalies have the same skill level?

IMO, arguing that shootouts are random implies that it doesn’t matter which offensive players or goaltenders a team employs.

Cool piece, and it’s nice to have some corroboration of something I wrote a while back (http://clownhypothesis.com/2014/01/01/is-a-goalies-shootout-performance-meaningful/).

Isn’t it a bit strawmannish, though, to argue against the idea that the shootout is random? I think the (valid) concern is mostly that the link between winning in regulation and winning a shootout is much more tenuous than we’d like.

Also, are you using only home data by any chance? The link you provided lists Henrik with 307 shootout attempts against.

Thanks, Clown (can I call you that?)

I’d agree that it’s a bit strawmannish, but I grew sick and tired of the “shootouts are random” headlines, especially when they were appearing in major media outlets. Even if most of the shootout is due to chance, there is still some skill involved.

Do you have a better data set? Would love to see it. I could only find active players.

My name’s Frank, the comment just shows up as my WordPress UID.

I think a good way to get better data is to scrape it on a season-by-season level from either the NHL or ESPN. I think Tango’s post links to someone who already aggregated these data up until 2012, so it’s missing a season or two.

“Backstrom, our worst goalie as judged by save percentage, has won just 22 of 55 (40%).” this seems extremely high. What is the mean win % in shootouts? Also do you think that the reason people think that shootouts are random is due to high amounts of parity?

Well, I left it a little open ended here, but I’ll try and answer the question of “How much difference can a good goalie make” today. Good questions

“Using these numbers, and assuming a team goes to the league average 13 shootouts in a season, the difference between a top goalie and a poor goalie is about 3 or 4 points alone, simply due to their shootout performances.”

That’s the difference between the top observed performance and the bottom observed performance, but surely we imagine that the differences in skill — while demonstrably non-zero — must be much less than the observed performances, right?

That is, you might guess that Fleury and Lundqvist are very likely to be above average, but I’d assume they’re still very unlikely to be 64-win-percent true talents in the long run.

Yes, I should have clarified this point. What I meant to say was that their past performances have been worth 3-4 points a year. This doesn’t hold for future performance, of course.

Haha, typical internet failure. I read a nice, thorough study that updates and improves on past work, and forget to say anything complimentary, just jumping right to the critique of a point that is in some ways minor.

Sorry. This is nice work. I completely agree that the shootout isn’t purely random; what I think remains to be tested is how much practical significance there is in the differences between skills — how much of an edge a team could get.

So this is good stuff. Shouldn’t be a surprise that Goalies were different eventually given their larger sample size. Couple of statistical suggestions.

1. Straight CIs are not appropriate here due to multiplicity, so your bars should be a good bit wider, even using something less conservative than Bonferroni.

2. You can get a good deal of information by estimating the slope from your qqplot. I think, 1/slope should give you an idea about the relative variability in these goalies to the theoretical.

My visual estimate says a slope of about 1.22 which would suggest about a 20% effect of skill.

3. Do the results change if you include all goalies?

I agree on the CI’s, Michael. My intent was that simulating random seasons would give me a better sense of what counts as extreme performance, but conservative CI’s would’ve been appropriate, too.

Slope is about 1.3, so your visual estimate was pretty close. Hadn’t thought of looking at that.

I set a different cutoff of 10 shootout attempts, and re-ran the same numbers. The graphs pretty much looked the same, but things did move slightly closer to a coin flip in the simulation: specifically, in 2.5% of simulations the simulated numbers exceeded the existing netminder distribution.

That’s okay. It’s everyone’s intuition when they read something wrong to point that issue out!

Thanks for the kind words, and I agree, there’s plenty of work left to be done.